These questions were asked and mostly answered during our webcast, Storage Trends in 2023 and Beyond. Graphics included in this article were shown during the webcast, and several of the questions refer to the data in the charts.

Thank you to our panelists:

Don Jeanette, Vice President, TRENDFOCUS

Patrick Kennedy, Principal Analyst, ServeTheHome

Rick Kutcipal, At-Large Director, SCSI Trade Association and Product Planner, Data Center Solutions Group, Broadcom

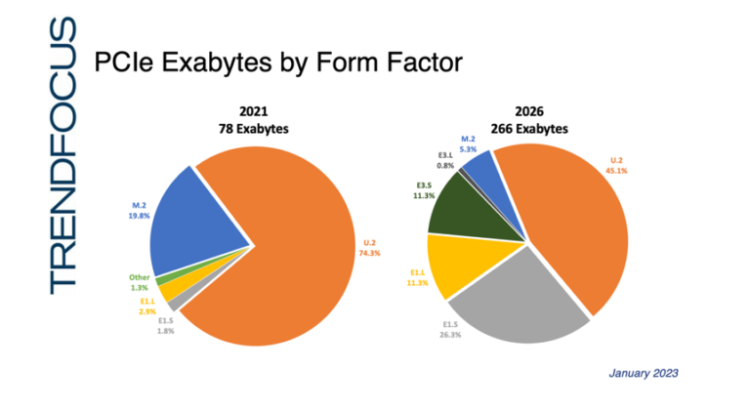

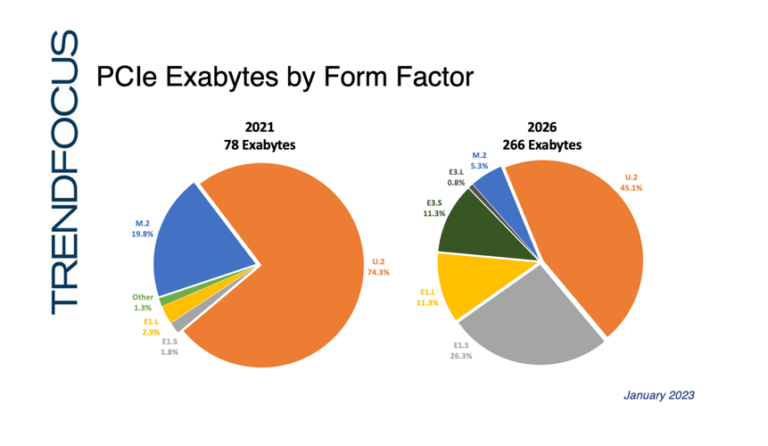

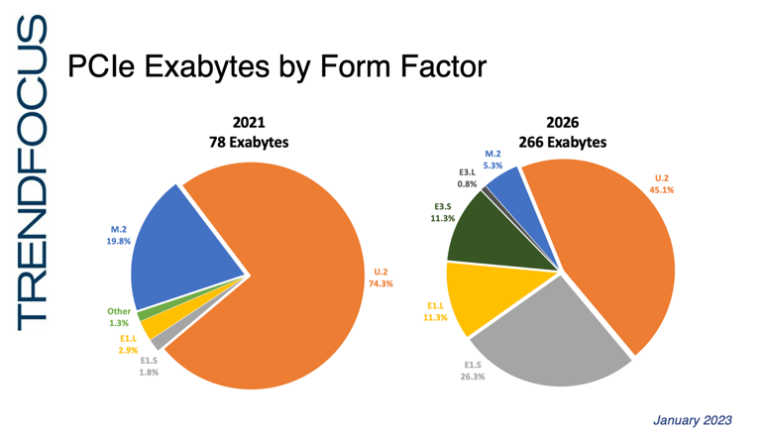

Q1: What does the future hold for U.3? (SFF-TA-1001) Was it included in the U.2 numbers?

U.2 should read U.X in the pie chart, so yes, U.3 is included. There are some shipments out there today, customers are taking it, and it will likely grow, but all the efforts and priorities are really on E3S, E1S and then, to some extent, E1L.

Q2: How do number of units compare to exabytes shipped?

For hard drives, the sweet spot for nearline SATA or SAS is about 18 terabytes today. For SSDs, SATA has been running at about a terabyte for several years now, and SAS is averaging about 3.6 to 3.9 terabytes today.

PCIe, interestingly enough, is lower than SAS today, but with these new form factors that we keep talking about, the average capacity for PCIe is going to really pop up because of the data center implementations. With E1S, one terabyte is going to 2, 4 and 8; ruler, or E1L, is 16 today, going to 32TB. U.2 average capacity is about 6 terabytes today, and that’s going to 8 and then 16 down the road. That’s why we always look at exabytes: that’s how pretty much every vendor and customer looks at how much storage they are going to buy. As the capacities go up, the units flatten out in the forecast. You could have a potential dip in units in late 2024 to 2025 because some big migrations to new form factors are coming, where capacity is going to go 4X in some cases: one terabyte goes to four terabytes.

Total units are 16 million for one quarter versus 39 exabytes. The exabyte number is more than 2X the unit number (in millions), which works out to an average of more than 2 terabytes per unit.
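The relationship between units and exabytes can be checked with a little arithmetic. The following is a minimal sketch in Python that uses only the two figures quoted above (16 million units and 39 exabytes in one quarter). The function names are illustrative, and the 4X scenario is a hypothetical that holds exabytes flat purely to show why unit counts can dip when capacities jump; it is not a forecast.

```python
TB_PER_EB = 1_000_000  # decimal units: 1 EB = 10**6 TB


def average_capacity_tb(exabytes: float, units: int) -> float:
    """Average capacity per shipped unit, in terabytes."""
    return exabytes * TB_PER_EB / units


def units_for(exabytes: float, avg_capacity_tb: float) -> float:
    """Number of units needed to ship a given number of exabytes."""
    return exabytes * TB_PER_EB / avg_capacity_tb


# Figures quoted in the answer: 16 million units and 39 EB in one quarter.
avg_tb = average_capacity_tb(39, 16_000_000)
print(f"average capacity: {avg_tb:.2f} TB per unit")

# Hypothetical scenario (an assumption, not a forecast): exabytes stay flat
# while the average capacity per unit goes 4X.
units_after_4x = units_for(39, avg_tb * 4)
print(f"units at 4X capacity: {units_after_4x / 1e6:.1f} million")
```

With exabytes held constant, a 4X jump in average capacity cuts the unit count to a quarter, which is why the forecast is tracked in exabytes rather than units.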

Q3: What needs to be true for SSDs to make significant gains into the HDD share of the market?

10K and 15K HDDs are pretty much dead already, and we said a lot about SSDs going into nearline. Some storage RAID companies are making an effort to sell their solid-state storage systems and cut into the nearline hard drive market. They are going to shave a little bit off that top layer of high capacity nearline-based systems, the ones that are still priced higher, where they can start to get to a workable price differential. You might see some elasticity, with certain customers moving over, but we will not see a major transition in the near term.

10K and 15K HDDs have struggled because folks realize that SSDs are faster, but there are other examples, such as boot drives. Boot drives used to be hard drives; now they are M.2 drives. We also look at applications that go beyond the traditional data center.

One good example of that is video data analytics at the edge. Many are building systems now, and they’re currently hard drive-based systems. They deploy them at the edge, ingest data, put it onto an array of hard drives, usually with AI acceleration to run inference on those video feeds live at the box, and push any anomalies up to the cloud.

Almost all of those boxes are currently hard drive boxes. Consider an edge box on every street corner or in every retail location: rolling a truck to fix a hard drive in that kind of application is difficult, and there are power and size constraints. As SSD capacities grow, and especially as they start to eclipse hard drives in capacity, SSDs open up a new type of application that folks can go after: not just the high performance segment, but also high capacity in a more compact space.

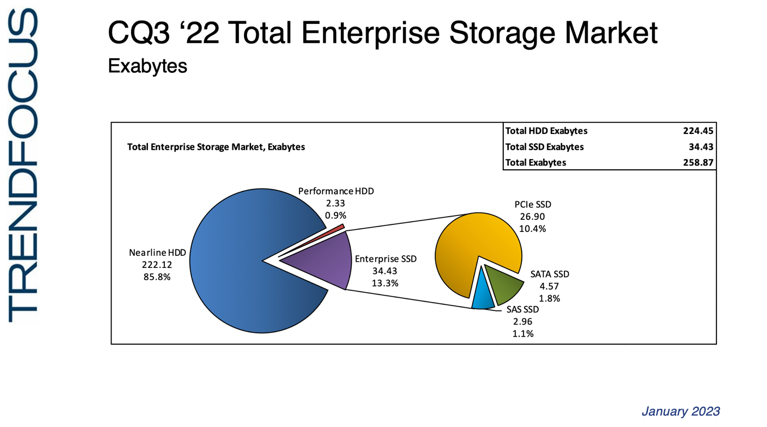

Q4: Do your numbers for SAS include both HDD and SSD?

(NOTE TO READERS: This data comes from TRENDFOCUS analyst firm, specifically from VP Don Jeanette. You are welcome to contact them if you are interested in purchasing their reports.)

Of the 255 exabytes of hard drives in the slides we presented, it’s about a 75-25 split in favor of SATA. Of the enterprise SSDs, SATA is about 5 exabytes a quarter, SAS is about 3 to 4 exabytes a quarter, and the bulk is PCIe at 24 to 26 exabytes.
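To see what those splits mean for SAS across both device types, here is a rough Python sketch. It assumes the 75-25 split is exact and that the HDD and SSD figures describe the same quarter, neither of which the answer states outright; the variable names are illustrative.

```python
# HDD figures quoted in the answer.
HDD_TOTAL_EB = 255
HDD_SATA_SHARE = 0.75  # "about a 75-25 split in favor of SATA"

hdd_sata_eb = HDD_TOTAL_EB * HDD_SATA_SHARE
hdd_sas_eb = HDD_TOTAL_EB - hdd_sata_eb

# Enterprise SSD figures quoted in the answer, as (low, high) EB per quarter.
SSD_EB = {"SATA": (5, 5), "SAS": (3, 4), "PCIe": (24, 26)}

ssd_total_low = sum(low for low, _ in SSD_EB.values())
ssd_total_high = sum(high for _, high in SSD_EB.values())

# SAS across HDD and SSD combined, under the same-quarter assumption.
sas_total_low = hdd_sas_eb + SSD_EB["SAS"][0]
sas_total_high = hdd_sas_eb + SSD_EB["SAS"][1]

print(f"HDD SATA: {hdd_sata_eb:.2f} EB, HDD SAS: {hdd_sas_eb:.2f} EB")
print(f"Enterprise SSD total: {ssd_total_low} to {ssd_total_high} EB")
print(f"SAS (HDD + SSD): {sas_total_low:.2f} to {sas_total_high:.2f} EB")
```

Under these assumptions, hard drives account for the large majority of SAS exabytes, with SAS SSDs adding only a few exabytes on top.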

Q5: When you say “Enterprise” does that include the Cloud Service Providers? Or is that a separate category?

Yes, CSPs are included in Enterprise. We distinguish Enterprise & CSP from Client.

Historically at the product level there are two buckets: enterprise or client. When someone says enterprise, I ask what their end markets are: the traditional enterprise market or the data center/hyperscale market? So, products vs. markets. Enterprise for me, at the device level, still encompasses both of those markets, but if you want to go further, it depends on where those products go: the data center market or the traditional enterprise market.

From my perspective, that categorization is totally right. Initially, there was client and enterprise. Because Cloud Service Providers have become such a big market in themselves, we tend to say data center versus enterprise. There are even some pockets of folks that operate at a smaller than hyperscale size but build and run infrastructure much more like a hyperscaler would than like a traditional enterprise.

Another way to say it is that sometimes we talk about data center class SSDs, enterprise class SSDs, and a delineating point would be level of qualification.

Q6: What is the cost difference between HDD and SSD? And do you see the cost parity getting closer?

For HDDs, we are at a little over a penny a gigabyte in nearline today. It’s not going down 30 or 40% in a quarter or a year, depending on supply, like we are seeing in NAND today. NAND or SSD pricing can drop 40% in two quarters, but it might jump back up another 20 or 30% depending on which way the wind blows.

It will continue to go down. For SSDs, we are at sub ten cents a gigabyte today on average, plus or minus, and that is going to go down, even with QLC implementations in certain spots, but my answer to “same price” is probably never. Even if that gets cut in half, it’s going to be tough for a lot of teams out there to say let’s do it. Product specifications often win on paper when it comes to comparisons, but at the device brick level, ask any procurement team in any company in the world, and it would have to be nearly the same price to transition from one technology to the other.
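The size of the gap is easier to see with numbers. The sketch below is a back-of-the-envelope Python calculation, not pricing data: it rounds the quoted figures to exactly 1 cent per gigabyte for nearline HDD and 10 cents per gigabyte for SSD (the answer says "1+" and "sub ten", so the real ratio is somewhat smaller), and it borrows the 18 TB nearline sweet spot from Q2 as the capacity. All names are illustrative.

```python
# Rounded assumptions based on the figures quoted in the answer.
HDD_CENTS_PER_GB = 1    # "1+ penny a gigabyte", treated as exactly 1 cent
SSD_CENTS_PER_GB = 10   # "sub ten cents a gigabyte", treated as an upper bound
CAPACITY_GB = 18_000    # 18 TB, the nearline sweet spot mentioned in Q2


def cost_usd(cents_per_gb: float, capacity_gb: int) -> float:
    """Media cost in US dollars for a given capacity."""
    return cents_per_gb * capacity_gb / 100


ratio_today = SSD_CENTS_PER_GB / HDD_CENTS_PER_GB
ratio_if_ssd_halves = (SSD_CENTS_PER_GB / 2) / HDD_CENTS_PER_GB

hdd_cost = cost_usd(HDD_CENTS_PER_GB, CAPACITY_GB)
ssd_cost = cost_usd(SSD_CENTS_PER_GB, CAPACITY_GB)
ssd_cost_halved = cost_usd(SSD_CENTS_PER_GB / 2, CAPACITY_GB)

print(f"SSD/HDD price ratio today: about {ratio_today:.0f}x")
print(f"SSD/HDD price ratio if SSD halves: about {ratio_if_ssd_halves:.0f}x")
print(f"18 TB of HDD: ${hdd_cost:,.0f}; 18 TB of SSD: ${ssd_cost:,.0f}")
print(f"18 TB of SSD at half price: ${ssd_cost_halved:,.0f}")
```

Even with SSD pricing cut in half and HDD pricing frozen, the per-gigabyte gap in this sketch is still about 5x, which is the point behind "probably never".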

We need to remember that the three remaining HDD vendors are still investing very heavily in capacity optimized technologies. We’ve seen incremental improvements with things like SMR. On the horizon we’re watching heat-assisted magnetic recording (HAMR); if and when HAMR happens, that could be a real boost on the HDD side. So it is a very active playing field.

There are three levers: HAMR, SMR, and adding components. Not long ago we were shipping nearline hard drives with 4 or 5 platters; we’re at 9 or 10 now, and we are discussing going to 11. They always find a way. For the three vendors left, it constitutes the majority of what they have, so they are going to do everything in their power to keep that competitive edge.

Q7: Why are hyperscalers still planning to use SAS for storage?

In a modern server, for example a single socket Sapphire Rapids Supermicro server, we talked about the PCIe Gen 5 lanes being so much faster than PCIe Gen 3 lanes. When you have a SAS controller, you can handle so many more hard drives for a given lane of PCIe bandwidth, and the hard drives can actually feed it. An interesting way to think about it is that a PCIe x16 slot on a server like this now has more bandwidth than you had in an entire server a couple of years ago. One of the biggest reasons for the SAS architecture is that you can put a lot of storage behind it. The second is dual port, which matters especially if you are running a multi-homed solution: there are dual port hard drives and dual port NVMe drives, but not all drives are dual port, even among the enterprise drives.
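The lane arithmetic behind that argument can be sketched in Python. The per-lane signaling rates (8, 16 and 32 GT/s for Gen 3, 4 and 5) and the 128b/130b encoding are the published PCIe figures. The 250 MB/s sustained hard drive throughput is an assumed round number for illustration, not a figure from the webcast, and the sketch ignores protocol overhead and SAS controller limits.

```python
# Published PCIe per-lane signaling rates, in gigatransfers per second.
GIGATRANSFERS_PER_S = {"gen3": 8, "gen4": 16, "gen5": 32}
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line encoding used since Gen 3

# Assumption for illustration only: sustained throughput of one hard drive.
ASSUMED_HDD_MB_PER_S = 250


def lane_mb_per_s(gen: str) -> float:
    """Raw one-direction bandwidth of a single PCIe lane, in MB/s."""
    bits_per_s = GIGATRANSFERS_PER_S[gen] * 1e9 * ENCODING_EFFICIENCY
    return bits_per_s / 8 / 1e6


def hdds_per_lane(gen: str, hdd_mb_per_s: float = ASSUMED_HDD_MB_PER_S) -> int:
    """Whole hard drives that one lane could feed at full sustained rate."""
    return int(lane_mb_per_s(gen) // hdd_mb_per_s)


for gen in ("gen3", "gen5"):
    print(f"{gen}: {lane_mb_per_s(gen):.0f} MB/s per lane, "
          f"about {hdds_per_lane(gen)} HDDs per lane")

x16_gen5_gb_per_s = 16 * lane_mb_per_s("gen5") / 1000
print(f"gen5 x16 slot: about {x16_gen5_gb_per_s:.0f} GB/s")
```

Under these assumptions a single Gen 5 lane can keep up with roughly four times as many hard drives as a Gen 3 lane, which illustrates why a SAS controller in one slot can front a large number of drives.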

Scalability is a main driver for hyperscalers using SAS. The scale of some of these machines is absolutely enormous. It’s about their nearline capacity, nearline storage and the scale of it.

Another primary driver is dollars per gigabyte. How are you going to store all this stuff? Think about the amount of capacity that must be in a hyperscale data center. It’s absolutely enormous. If they have to pay a dollar more per drive and buy ten million drives a year, that’s ten million dollars off their bottom line.

At the storage device level, SAS hard drives and SSDs offer more throughput, dual port versus single port, better ECC, and other technical and reliability metrics that favor SAS over SATA.

Another key consideration is supporting these storage requirements from a production perspective. The entire NAND industry currently produces about 170 exabytes in a given quarter. That has to take care of every PC out there, all these enterprise SSDs we’re talking about, every smartphone in the world (which together consume about 33-35% of the NAND in the quarter), and all those other applications: DIY, embedded, spot market stuff. Nearline alone did 250 exabytes in the quarter we just talked about. Production support for that is not insignificant.
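To put the production point in numbers, the short Python sketch below uses only the figures quoted above (170 EB of NAND per quarter, a 33-35% smartphone share, 250 EB of nearline HDD). The scenario in which NAND absorbs all nearline exabytes while every other use stays unchanged is a hypothetical chosen for illustration, and the names are illustrative.

```python
# Figures quoted in the answer, in exabytes per quarter.
NAND_EB_PER_QUARTER = 170
NEARLINE_HDD_EB_PER_QUARTER = 250
SMARTPHONE_SHARE = (0.33, 0.35)  # low and high ends of the quoted range

# NAND already committed to smartphones.
smartphone_eb = tuple(NAND_EB_PER_QUARTER * share for share in SMARTPHONE_SHARE)

# Nearline HDD output relative to the whole NAND industry's output.
nearline_vs_nand = NEARLINE_HDD_EB_PER_QUARTER / NAND_EB_PER_QUARTER

# Hypothetical: NAND output needed to keep serving today's uses AND
# replace every nearline exabyte, as a multiple of today's output.
required_output_multiple = (
    NAND_EB_PER_QUARTER + NEARLINE_HDD_EB_PER_QUARTER
) / NAND_EB_PER_QUARTER

print(f"smartphones: {smartphone_eb[0]:.1f} to {smartphone_eb[1]:.1f} EB")
print(f"nearline is {nearline_vs_nand:.2f}x total NAND output")
print(f"NAND would need {required_output_multiple:.2f}x today's output")
```

In this sketch, nearline alone is nearly one and a half times the entire NAND industry's quarterly output, so replacing it would require NAND production to roughly two and a half times its current level.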

Q8: What do you think will be the biggest storage challenge for 2023?

(Storage Influencer Perspective) The biggest storage challenge for 2023 is the newest generation of servers and their consolidation ratios. It’s like a hockey stick: generation on generation, people were used to consolidating maybe one and a half previous-generation servers into a current-generation server, and now we’re talking two, three, 4X. So how do you balance that, and how do you rethink your storage architecture? I think that is a real challenge for folks, and not just in the servers themselves. A 400G NIC gives you an entire 2017-generation server’s worth of PCIe bandwidth over a NIC. What does that do for an architecture, and how do you build with that? So, that’s my biggest storage challenge.

(Data Storage Analyst Perspective) Two things: one technical, one business. PCIe Gen 5 is obviously the hot topic today. I hear expectations from certain companies, and I know it’s going to go, but my response is always that it never goes the way you plan: it’s going to get pushed out, and the volumes will be a percentage lower than you think you are going to do.

Number two, on the business side, my concern for 2023 is the health of various companies out there, given the current environment we are in and the prices some of these storage devices are being sold at. That’s a legitimate concern about what the landscape looks like today, in January 2023, versus what we will be talking about in December 2023, because we’ve had three quarters of very tough times, and we have multiple quarters ahead. It is going to be interesting to see how certain companies navigate these waters.

(Manufacturer / Supplier Perspective) I break mine up into two pieces, coming from an infrastructure perspective, which is what I’m very focused on. One is meeting the demands of the scale required by our data center customers. How do we provide that scale? It’s more than just how many things we can hook up. It’s making them all work well together, providing the appropriate quality of service, spinning up large systems; it’s very complicated. The second is signal integrity around PCIe Gen 5. From an infrastructure perspective, that’s a big challenge that the whole ecosystem is facing, and it is going to be a very focused effort in 2023.

Q9: Your 2021 vs 2026 Exabyte slide: is that only SSD, or does it include HDD exabytes?

(NOTE TO READERS: This data comes from TRENDFOCUS analyst firm, specifically from VP Don Jeanette. You are welcome to contact them if you are interested in purchasing their reports.)

The exabytes referred to in that slide (graphic below) are for SSD.

Q11: Is there a difference in performance with U.2 and U.3?

No, there is no difference in performance between U.2 and U.3. Both are the same 2.5” form factor.

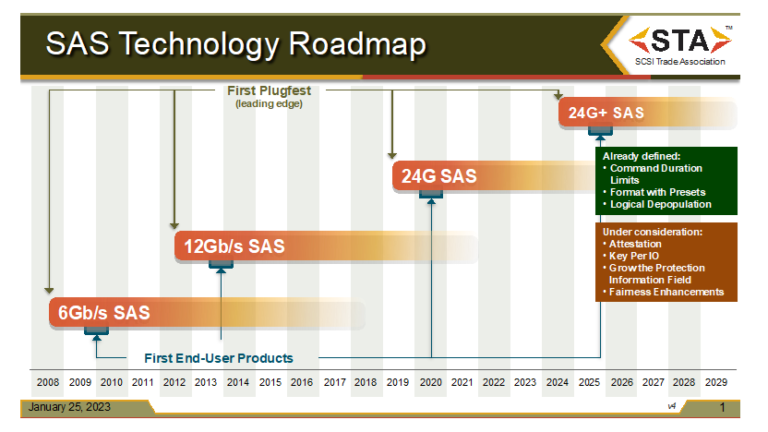

Q12: Is there going to be a 48G SAS, and/or what is the status of 24G+ SAS? What are the challenges with the next version of Serial Attached SCSI?

Based on the current market demands, we believe that the SAS-4 physical layer, 24G SAS (and 24G+ SAS), satisfies performance requirements. 24G+ SAS includes emerging requirements (see technology roadmap graphic below, which includes detailed call out for 24G+ SAS).

From a technical standpoint, 48G SAS is achievable, but we will go to it only as the market demands.

Q13: Will there be NVMe hard drives soon or will HDDs always be SAS/SATA behind a SAS infrastructure?

OCP is working on an NVMe hard drive specification. According to market data, the vast majority of HDDs will be connected behind a SAS infrastructure.

Q15: Do you have any data of the trends on NVMe RAID?

No, we do not have data on NVMe RAID trends. The market data discussed in this webcast comes from TRENDFOCUS analyst firm, specifically their VP Don Jeanette. You are welcome to contact Don if you are interested in purchasing their reports.

Q16: Do you have any data on the SAS-4 SSD deployment in 2023?

No, we do not have that data. The market data discussed in this webcast comes from TRENDFOCUS analyst firm, specifically their VP Don Jeanette. You are welcome to contact Don if you are interested in purchasing their reports.