At our recent SNIA Webcast “Data Protection and OpenStack Mitaka,” Ben Swartzlander, Project Team Lead OpenStack Manila (NetApp), and Dr. Sam Fineberg, Distinguished Technologist (HPE), provided terrific insight into data protection capabilities surrounding OpenStack. If you missed the Webcast, I encourage you to watch it on-demand at your convenience. We did not have time to get to all of out attendees’ questions during the live event, so as promised, here are answers to the questions we received.

Q. Why are there NFS drivers for Cinder?

A. It’s fairly common in the virtualization world to store virtual disks as files in filesystems. NFS is widely used to connect hypervisors to storage arrays for the purpose of storing virtual disks, which is Cinder’s main purpose.

Q. What does “crash-consistent” mean?

A. It means that data on disk is what would be there is the system “crashed” at that point in time. In other words, the data reflects the order of the writes, and if any writes are lost, they are the most recent writes. To avoid losing data with a crash consistent snapshot, one must force all recently written data and metadata to be flushed to disk prior to snapshotting, and prevent further changes during the snapshot operation.

Q. How do you recover from a Cinder replication failover?

A. The system will continue to function after the failover, however, there is currently no mechanism to “fail-back” or “re-replicate” the volumes. This function is currently in development, and the OpenStack community will have a solution in a future release.

Q. What is a Cinder volume type?

A. Volume types are administrator-defined “menu choices” that users can select when creating new volumes. They contain hidden metadata, in the cinder.conf file, which Cinder uses to decide where to place them at creation time, and which drivers to use to configure them when created.

Q. Can you replicate when multiple Cinder backends are in use?

A. Yes

Q. What makes a Cinder “backup” different from a Cinder “snapshot”?

A. Snapshots are used for preserving the state of a volume from changes, allowing recovery from software or user errors, and also allowing a volume to remain stable long enough for it to be backed up. Snapshots are also very efficient to create, since many devices can create them without copying any data. However, snapshots are local to the primary data and typically have no additional protection from hardware failures. In other words, the snapshot is stored on the same storage devices and typically shares disk blocks with the original volume.

Backups are stored in a neutral format which can be restored anywhere and typically on separate (possibly remote) hardware, making them ideal for recovery from hardware failures.

Q. Can you explain what “share types” are and how they work?

A. They are Manila’s version of Cinder’s volume types. One key difference is that some of the metadata about them is not hidden and visible to end users. Certain APIs work with shares of types that have specific capabilities.

Q. What’s the difference between Cinder’s multi-attached and Manila’s shared file system?

A. Multi-attached Cinder volumes require cluster-aware filesystems or similar technology to be used on top of them. Ordinary file systems cannot handle multi-attachment and will corrupt data quickly if attached more than one system. Therefore cinder’s multi-attach mechanism is only intended for fiesystems or database software that is specifically designed to use it.

Manilla’s shared filesystems use industry standard network protocols, like NFS and SMB, to provide filesystems to arbitrary numbers of clients where shared access is a fundamental part of the design.

Q. Is it true that failover is automatic?

A. No. Failover is not automatic, for Cinder or Manila

Q. Follow-up on failure, my question was for the array-loss scenario described in the Block discussion. Once the admin decides the array has failed, does it need to perform failover on a “VM-by-VM basis’? How does the VM know to re-attach to another Fabric, etc.?

A. Failover is all at once, but VMs do need to be reattached one at a time.

Q. What about Cinder? Is unified object storage on SHV server the future of storage?

A. This is a matter of opinion. We can’t give an unbiased response.

Q. What about a “global file share/file system view” of a lot of Manila “file shares” (i.e. a scalable global name space…)

A. Shares have disjoint namespaces intentionally. This allows Manila to provide a simple interface which works with lots of implementations. A single large namespace could be more valuable but would preclude many implementations.

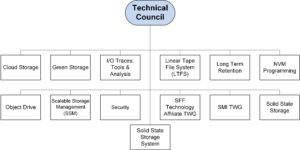

Mark Carlson is the current Chair of the SNIA Technical Council (TC). Mark has been a SNIA member and volunteer for over 18 years, and also wears many other SNIA hats. Recently, SNIA on Storage sat down with Mark to discuss his first nine months as the TC Chair and his views on the industry.

Mark Carlson is the current Chair of the SNIA Technical Council (TC). Mark has been a SNIA member and volunteer for over 18 years, and also wears many other SNIA hats. Recently, SNIA on Storage sat down with Mark to discuss his first nine months as the TC Chair and his views on the industry.