Earlier this month, the SNIA Cloud Storage Technologies Initiative (CSTI) presented a live webcast called “High Performance Storage at Exascale,” where our HPC experts, Glyn Bowden, Torben Kling Petersen and Michael Hennecke, talked about processing and storing data in shockingly huge numbers. The session raised some interesting points on how scale is quickly being redefined and how what was cost- or compute-prohibitive for most a few years ago may be within reach for all sooner than expected.

- Is HPC a rich man’s game? The scale appears to have increased dramatically over the last few years. Is the cost increasing to the point where this is only for wealthy organizations, or has the cost decreased to the point where small to medium-sized enterprises might be able to indulge in HPC activities?

- [Torben] I would say the answer is both. To build these really super big systems you need hundreds of millions of dollars, because the sheer cost of infrastructure goes beyond anything that we’ve seen in the past. On the other hand, you also see HPC systems in the most unlikely places, like a web retailer that mainly sells shoes. They had a Lustre system driving their back end, and HPC out-competed a standard NFS solution. So, we see this going in different directions. Price has definitely gone down significantly; essentially the cost of a large storage system now is the same as it was 10 years ago. It’s just that now it’s 100 times faster and 50 times larger. That said, it’s not cheap to do any of these things because of the amount of hardware you need.

- [Michael] We are seeing the same thing. We like to say that these types of HPC systems are more like a time machine that shows you what will show up in the general enterprise world a few years later. The cloud space is a prime example. All of the large HPC parallel file systems are now being adopted in the cloud, so we get a combination of the deployment mechanisms coming from the cloud world with the scale and robustness of the storage software infrastructure. Those are married together in very efficient ways. So, while not everybody will build a 200 petabyte or flash system for those types of use cases, the same technologies and the same software layers can be used at all scales. I really believe that this is like the research lab for what will become mainstream pretty quickly. On the cost side, another aspect that we haven’t covered is this old notion that tape is dead, disk is dead, and the next technology is always replacing the old ones. That hasn’t happened, but certainly as new technologies arrive and cost structures change, you get shifts in dollars per terabyte, or dollars per terabyte per second, which is more the HPC metric. So, how do we bring in QLC drives to lower the price of flash and then build larger systems out of that? That is also technology exploration done at this level that then benefits everybody.

- [Glyn] Being the consultant of the group, I guess I should say it depends. It depends on how you want to define HPC. I’ve got a device on my desk in front of me at the moment that fits in the palm of my hand. It has more than a thousand GPU cores in it, and it costs under $100. I can build a cluster of 10 of those for under $1,000. If you look back five years, that would absolutely be classified as HPC based on the number of cores and the amount of processing it can do. So, these things are shrinking and becoming far more affordable and far more of a commodity at the low end, meaning that we can take what was traditionally an HPC cluster and run it on things like Raspberry Pis at the edge somewhere. You can absolutely get the architecture, and what was previously seen as that kind of parallel batch processing against many cores, for next to nothing. As Michael said, it’s really the time machine, and this is where we’re catching up with what was HPC. The big stuff is always going to cost the big bucks, but I think it’s affordable to get something that you can play with and that works as an HPC system.
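To make the cost discussion a bit more concrete, here is a minimal sketch of the two metrics Michael mentions, dollars per terabyte and dollars per terabyte per second, applied to Torben's ratios (same cost, 50 times larger, 100 times faster). The baseline cost, capacity and throughput figures are hypothetical placeholders, not numbers from the webcast; only the ratios come from the discussion.

```python
def cost_metrics(cost_usd, capacity_tb, throughput_tb_per_s):
    """Return (dollars per TB, dollars per TB/s) for a storage system."""
    return cost_usd / capacity_tb, cost_usd / throughput_tb_per_s


# Hypothetical baseline system, chosen only to make the arithmetic concrete.
BASE_COST_USD = 10_000_000
BASE_CAPACITY_TB = 1_000
BASE_THROUGHPUT_TB_PER_S = 0.5

old = cost_metrics(BASE_COST_USD, BASE_CAPACITY_TB, BASE_THROUGHPUT_TB_PER_S)

# Same budget, with the ratios quoted in the session: 50x capacity, 100x speed.
new = cost_metrics(
    BASE_COST_USD,
    BASE_CAPACITY_TB * 50,
    BASE_THROUGHPUT_TB_PER_S * 100,
)

capacity_gain = old[0] / new[0]    # how much cheaper each TB became
throughput_gain = old[1] / new[1]  # how much cheaper each TB/s became

print(f"$/TB improved by a factor of {capacity_gain:.0f}")
print(f"$/(TB/s) improved by a factor of {throughput_gain:.0f}")
```

Whatever baseline you plug in, the improvement factors depend only on the ratios: at constant cost, dollars per terabyte falls by the capacity factor and dollars per terabyte per second falls by the throughput factor.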

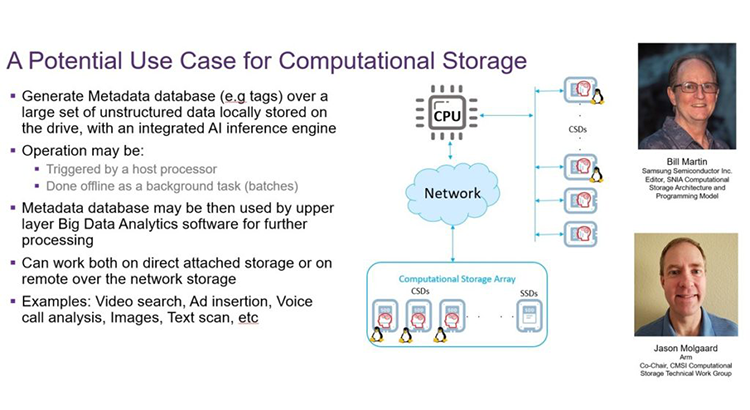

We also had several questions on persistent memory. SNIA covers this topic extensively. You can access a wealth of information here. I also encourage you to register for SNIA’s 2022 Persistent Memory + Computational Storage Summit, which will be held virtually May 25-26. There was also interest in CXL (Compute Express Link, a high-speed, cache-coherent interconnect for processors, memory and accelerators). You can find more information on that in the SNIA Educational Library.
