Category: Solid State Storage

The Too Proud to Ask Train Makes Another Stop: Where Does My Data Go?

Your Questions Answered on Non-Volatile DIMMs

by Arthur Sainio, SNIA NVDIMM SIG Co-Chair, SMART Modular

SNIA’s Non-Volatile DIMM (NVDIMM) Special Interest Group (SIG) had a tremendous response to their most recent webcast: NVDIMM: Applications are Here! You can view the webcast on demand.

Viewers had many questions during the webcast. In this blog, the NVDIMM SIG answers those questions and shares the SIG’s knowledge of NVDIMM technology.

Have a question? Send it to nvdimmsigchair@snia.org.

1. What about 3D XPoint? How will this technology impact the market?

How Many IOPS? Users Share Their 2017 Storage Performance Needs

New on the Solid State Storage website is a whitepaper from analysts Tom Coughlin of Coughlin Associates and Jim Handy of Objective Analysis that details IT managers' requirements for storage performance. The paper examines how these requirements changed over a four-year period for a range of applications, including databases, online transaction processing, cloud and storage services, and scientific and engineering computing.

Latency Budgets for Solid State Storage Access

New solid state storage technologies are forcing the industry to refine distinctions between networks and other types of system interconnects. The question on everyone’s mind is: when is it beneficial to use networks to access solid state storage, particularly persistent memory?

It’s not quite as simple as a “yes/no” answer. The answer to this question involves application, interconnect, memory technology and scalability factors that can be analyzed in the context of a latency budget.

On April 19th, Doug Voigt, Chair of the SNIA NVM Programming Model Technical Work Group, returns for a live SNIA Ethernet Storage Forum webcast, “Architectural Principles for Networked Solid State Storage Access – Part 2,” where we will explore latency budgets for various types of solid state storage access. These budgets can be used to determine which combinations of interconnects, technologies, and scales are compatible with Load/Store instruction access and which are better suited to IO completion techniques such as polling or blocking.
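To make the latency-budget idea concrete, here is a minimal Python sketch that sums per-component latencies along an access path and maps the total to a completion technique. The component names, their latencies, and the two cutoffs (`LOAD_STORE_MAX_NS`, `POLLING_MAX_NS`) are hypothetical placeholders chosen for illustration, not figures from the webcast.

```python
from dataclasses import dataclass

# Hypothetical cutoffs, for illustration only (not taken from the webcast).
LOAD_STORE_MAX_NS = 1_000   # assume a stalled Ld/St can tolerate about 1 us
POLLING_MAX_NS = 100_000    # assume polling pays off up to about 100 us


@dataclass
class Component:
    """One contributor to end-to-end latency (media, link, switch, software)."""
    name: str
    latency_ns: int


def total_latency_ns(path: list[Component]) -> int:
    """Sum the latency budget consumed along an access path."""
    return sum(c.latency_ns for c in path)


def completion_technique(path: list[Component]) -> str:
    """Map a path's total latency to the completion style it best fits."""
    total = total_latency_ns(path)
    if total <= LOAD_STORE_MAX_NS:
        return "load/store"
    if total <= POLLING_MAX_NS:
        return "polling"
    return "blocking"


if __name__ == "__main__":
    # Hypothetical example paths.
    local_pm = [Component("PM media", 300)]
    remote_pm = [Component("PM media", 300),
                 Component("RDMA network round trip", 3_000),
                 Component("software stack", 5_000)]
    for label, path in [("local PM", local_pm), ("remote PM", remote_pm)]:
        print(label, total_latency_ns(path), completion_technique(path))
```

With these made-up numbers, local PM stays within the Ld/St budget while the same media reached over a network falls into the polling range; the point is the bookkeeping, not the specific cutoffs.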

In this webcast you’ll learn:

- Why latency is important in accessing solid state storage

- How to determine the appropriate use of networking in the context of a latency budget

- Do’s and don’ts for Load/Store access

This is a technical seminar built upon part 1 of this series. If you missed it, you can view it on demand at your convenience. It will give you a solid foundation on this topic, outlining key architectural principles that allow us to think about the application of networked solid state technologies more systematically.

I hope you will register today for the April 19th event. Doug and I will be on hand to answer questions on the spot.

Attend Live – or Live Stream – SNIA’s Persistent Memory Summit January 18

by Marty Foltyn

SNIA’s Persistent Memory Summit makes its fifth annual appearance in Silicon Valley next Wednesday, January 18, and if you are in the vicinity of the Westin San Jose, you owe it to yourself to check it out.

SNIA is well known for its technology-focused, no vendor-hype conferences, and this one-day event will feature 12 presentations and two panels that will “level set” the discussion, review persistent memory usage, describe applications incorporating PM available today, discuss the infrastructure and implementation, and provide a vision of the “next generation” of persistent memory.

You’ll meet speakers from SNIA member companies Intel, Micron, Microsemi, VMware, Red Hat, Microsoft, AgigA Tech, Western Digital, and Spin Transfer. Live demonstrations of persistent memory solutions will be featured from Summit underwriters Intel and the SNIA Solid State Storage Initiative, and Summit sponsors Microsemi, VMware, AgigA Tech, SMART Modular, and Spin Transfer.

Registration is complimentary but limited; visit http://www.snia.org/pm-summit for the complete agenda and how to sign up. And if your travels don’t permit you to attend in person, the Persistent Memory Summit will be live-streamed on the SNIAVideo channel at https://www.youtube.com/user/SNIAVideo.

SNIA Storage Developer Conference: The Knowledge Continues

SNIA’s 18th Storage Developer Conference is officially a success, with 124 general and breakout sessions; Cloud Interoperability, Kinetic Storage, and SMB3 plugfests; ten Birds-of-a-Feather sessions; and amazing networking among 450+ attendees. Sessions on NVMe over Fabrics were the most attended, with Persistent Memory, Object Storage, and Performance close behind. Many thanks to the SDC 2016 sponsors, who engaged attendees in exciting technology discussions.

For those not familiar with SDC, this technical industry event is designed for a variety of storage technologists at various levels from developers to architects to product managers and more. And, true to SNIA’s commitment to educating the industry on current and future disruptive technologies, SDC content is now available to all – whether you attended or not – for download and viewing.

You’ll want to stream keynotes from Citigroup, Toshiba, DSSD, Los Alamos National Labs, Broadcom, Microsemi, and Intel – they’re available now on demand on SNIA’s YouTube channel, SNIAVideo.

All SDC presentations are now available for download, and over the next few months you can continue to download SDC podcasts, which combine audio and slides. The first podcast from SDC 2016, on hyperscalers, is available here along with all 2015 SDC podcasts, and more will be available in the coming weeks.

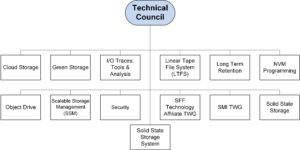

SNIA thanks all its members and colleagues who contributed to make SDC a success! A special thanks goes out to the SNIA Technical Council, a select group of acknowledged industry experts who work to guide SNIA technical efforts. In addition to driving the agenda and content for SDC, the Technical Council oversees and manages SNIA Technical Work Groups, reviews architectures submitted by Work Groups, and is the SNIA’s technical liaison to standards organizations. Learn more about these visionary leaders at http://www.snia.org/about/organization/tech_council.

And finally, don’t forget to mark your calendars now for SDC 2017 – September 11-14, 2017, again at the Hyatt Regency Santa Clara. Watch for the Call for Presentations to open in February 2017.

The Changing World of SNIA Technical Work – A Conversation with Technical Council Chair Mark Carlson

Mark Carlson is the current Chair of the SNIA Technical Council (TC). Mark has been a SNIA member and volunteer for over 18 years, and also wears many other SNIA hats. Recently, SNIA on Storage sat down with Mark to discuss his first nine months as the TC Chair and his views on the industry.

SNIA on Storage (SoS): Within SNIA, what is the most important activity of the SNIA Technical Council?

Mark Carlson (MC): The SNIA Technical Council works to coordinate and approve the technical work going on within SNIA. This includes both SNIA Architecture (standards) and SNIA Software. The work is conducted within 13 SNIA Technical Work Groups (TWGs). The members of the TC are elected from the voting companies of SNIA, and the Council also includes appointed members and advisors as well as SNIA regional affiliate advisors.

SoS: What has been your focus during these first nine months of 2016?

MC: The SNIA Technical Council has overseen a major effort to integrate a new standards organization into SNIA. The creation of the new SNIA SFF Technology Affiliate (TA) Technical Work Group has brought in a very successful group of folks and standards related to storage connectors and transceivers. This work group, formed in June 2016, carries forward the work of the longstanding SFF Committee, which operated from 1990 until mid-2016. In 2016, SFF Committee leaders transitioned organizational stewardship to SNIA, to operate under a special membership class named Technology Affiliate, while retaining their longstanding technical focus on specifications, just as all SNIA TWGs do.

SoS: What changes did SNIA implement to form the new Technology Affiliate membership class and why?

MC: The SNIA Policy and Procedures were changed to account for this new type of membership. Companies can now join an Affiliate TWG without having to join SNIA as a US member. Current SNIA members who want to participate in a Technology Affiliate like SFF can join a Technology Affiliate and pay the separate dues. The SFF was a catalyst – we saw an organization looking for a new home as its membership evolved and its leadership transitioned. They felt SNIA could be this home but we needed to complete some activities to make it easier for them to seamlessly continue their work. The SFF is now fully active within SNIA and also working closely with T10 and T11, groups that SNIA members have long participated in.

SoS: Is forming this Technology Affiliate a one-time activity?

MC: Definitely not. The SNIA is actively seeking organizations that are looking for the structure SNIA provides: IP policies, established infrastructure to conduct their work, and 160+ leading companies with volunteers who know storage and networking technology.

SoS: What are some of the customer pain points you see in the industry?

MC: Critical pain points the TC has started to address with new TWGs over the last 24 months include the performance of solid state storage arrays, where the SNIA Solid State Storage Systems (S4) TWG is working to identify, develop, and coordinate system performance standards for solid state storage systems; and object drives, where the Object Drive TWG is working to identify, develop, and coordinate standards for object drives operating as storage nodes in scale-out storage solutions. With a number of new disk drive interfaces emerging that add capabilities ranging from external storage to in-storage compute, we want to make sure these drives can be managed at scale and are interoperable.

SoS: What’s upcoming for the next six months?

MC: The TC is currently working on a white paper to address data center drive requirements and the features and existing interface standards that satisfy some of those requirements. Of course, not all the solutions to these requirements will come from SNIA, but we think SNIA is in a unique position to bring in the data center customers that need these new features and work with the drive vendors to prototype solutions that then make their way into other standards efforts. Features that are targeted at the NVM Express, T10, and T13 committees would be coordinated with these customers.

SoS: Can non-members get involved with SNIA?

MC: Until very recently, if a company wanted to contribute to a software project within SNIA, they had to become a member. This was limiting to the community, and cut off contributions from those who were using the code, so SNIA has developed a convenient Contributor License Agreement (CLA) for contributions to individual projects. This allows external contributions but does not change the software licensing. The CLA is compatible with the IP protections that the SNIA IP Policy provides to our members. Our hope is that this will create a broader community of contributors to a more open SNIA, and facilitate open source project development even more.

SoS: Will you be onsite for the upcoming SNIA Storage Developer Conference (SDC)?

MC: Absolutely! I look forward to meeting SNIA members and colleagues September 19-22 at the Hyatt Regency Santa Clara. We have a great agenda, now online, that the TC has developed for this, our 18th conference, and registration is now open. SDC brings in more than 400 of the leading storage software and hardware developers, storage product and solution architects, product managers, storage product quality assurance engineers, product line CTOs, storage product customer support engineers, and in-house IT development staff from around the world. If technical professionals are not familiar with the education and knowledge that SDC can provide, a great way to get a taste is to check out the SDC Podcasts now posted, and the new ones that will appear leading up to SDC 2016.

Flash Memory Summit Highlights SNIA Innovations in Persistent Memory & Flash

SNIA and the Solid State Storage Initiative (SSSI) invite you to join them at Flash Memory Summit 2016, August 8-11 at the Santa Clara Convention Center. SNIA members and colleagues receive $100 off any conference package using the code “SNIA16” by August 4 when registering for Flash Memory Summit at http://www.flashmemorysummit.com.

On Monday, August 8, from 1:00pm – 5:00pm, a SNIA Education Afternoon will be open to the public in SCCC Room 203/204, where attendees can learn about multiple storage-related topics with five SNIA Tutorials on flash storage, combined service infrastructures, VDBench, stored-data encryption, and Non-Volatile DIMM (NVDIMM) integration from SNIA member speakers.

Following the Education Afternoon, the SSSI will host a reception and networking event in SCCC Room 203/204 from 5:30 pm – 7:00 pm with SSSI leadership providing perspectives on the persistent memory and SSD markets, SSD performance, NVDIMM, SSD data recovery/erase, and interface technology. Attendees will also be entered into a drawing to win solid state drives.

SNIA and SSSI members will also be featured during the conference in the following sessions:

- Persistent Memory (Preconference Session C), Monday, August 8, 8:30 am – 12:00 noon: NVDIMM presentation by Arthur Sainio, SNIA NVDIMM SIG Co-Chair (SMART Modular)

- Data Recovery of SSDs (Session 102-A), Tuesday, August 9, 9:45 am – 10:50 am: SIG activity discussion by Scott Holewinski, SSSI Data Recovery/Erase SIG Chair (Gillware)

- Persistent Memory – Beyond Flash, sponsored by the SNIA SSSI (Forum R-21), Wednesday, August 10, 8:30 am – 11:00 am. Chairperson: Jim Pappas, SNIA Board of Directors Vice-Chair/SSSI Co-Chair (Intel); papers presented by SNIA members Rob Peglar (Symbolic IO), Rob Davis (Mellanox), Ken Gibson (Intel), Doug Voigt (HP), and Neal Christensen (Microsoft)

- NVDIMM Panel, organized by the SNIA NVDIMM SIG (Session 301-B), Thursday, August 11, 8:30 am – 9:45 am. Chairperson: Jeff Chang, SNIA NVDIMM SIG Co-Chair (AgigA Tech); papers presented by SNIA members Alex Fuqa (HP) and Neal Christensen (Microsoft)

Finally, don’t miss the SNIA SSSI in Expo booth #820 in Hall B and in the Solutions Showcase in Hall C on the FMS Exhibit Floor. Attendees can review a series of updated performance statistics on NVDIMM and SSD, see live NVDIMM demonstrations, access SSD data recovery/erase education, and preview a new white paper discussing erasure with regard to SSDs. SNIA representatives will also be present to discuss other SNIA programs such as certification, conformance testing, membership, and conferences.

Principles of Networked Solid State Storage – Q&A

At this month’s SNIA Ethernet Storage Forum Webcast, “Architectural Principles for Networked Solid State Storage Access,” Doug Voigt, Chair of the SNIA NVM Programming Technical Working Group, and a member of the SNIA Technical Council, outlined key architectural principles surrounding the application of networked solid state technologies. We had a flurry of questions near the end of the Webcast that we did not have enough time to answer. Here are Doug’s answers to all the questions we received during the event:

Q. Are there wait cycles in accessing persistent memory?

A. It depends entirely on which persistent memory (PM) technology is being accessed and how the memory interconnect is used. Some technologies have write times that are quite different from read times. When using tightly timed interconnects such as DDR with those technologies it may be difficult to avoid wait cycles.

Q. How do pmalloc and malloc share the virtual address space of the application?

A. This is entirely up to the OS and other libraries operating within any constraints of the processor architecture-specific memory management units. A good mental model would be fairly large regions of contiguous address space in both the physical and virtual domains, where each region will comprise a single type of memory. Capacity will be reserved for pmalloc and malloc in the appropriate regions.
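The mental model in this answer (large contiguous regions, each backed by a single type of memory, with capacity reserved for each allocator) can be sketched as a toy Python model. The `Region` and `AddressSpace` classes, the base addresses, and the bump-pointer strategy are all hypothetical; real OS memory managers and allocators are far more elaborate.

```python
class Region:
    """A contiguous virtual address range backed by a single memory type."""

    def __init__(self, base: int, size: int, kind: str):
        self.base, self.size, self.kind = base, size, kind
        self.next_free = base  # simple bump pointer; nothing is ever freed

    def alloc(self, nbytes: int, align: int = 64) -> int:
        # Round the bump pointer up to the requested alignment.
        start = (self.next_free + align - 1) // align * align
        if start + nbytes > self.base + self.size:
            raise MemoryError(f"{self.kind} region exhausted")
        self.next_free = start + nbytes
        return start


class AddressSpace:
    """Toy process address space with one region per memory type."""

    def __init__(self, regions: list[Region]):
        self.regions = {r.kind: r for r in regions}

    def malloc(self, nbytes: int) -> int:
        return self.regions["dram"].alloc(nbytes)  # volatile memory region

    def pmalloc(self, nbytes: int) -> int:
        return self.regions["pm"].alloc(nbytes)    # persistent memory region


if __name__ == "__main__":
    space = AddressSpace([Region(0x1000_0000, 1 << 20, "dram"),
                          Region(0x4000_0000, 1 << 20, "pm")])
    print(hex(space.malloc(100)), hex(space.pmalloc(100)), hex(space.malloc(10)))
```

Each allocator draws only from its own region, so volatile and persistent allocations never interleave in the address space, which is the property the answer's mental model relies on.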

Q. Always flush after doing your memory-mapped IO. Is that simply good hygiene?

A. Not exactly. The term “Memory Mapped IO” refers to control plane (as opposed to data plane) access. It is often reasonable to set up control plane memory as uncacheable, because the need for strict ordering of access to physical control plane registers is so pervasive that caching is generally not useful. Uncacheable writes are always flushed by the processor, as opposed to the application.

Generally, with memory-mapped IO devices the data plane uses direct memory access (DMA). With memory-mapped files (as opposed to memory-mapped IO), Load/Store instructions (commonly abbreviated “Ld/St”), not DMA, are used in the data plane. Disabling caching in the data plane is generally a big performance sacrifice for small byte-range access.

In the Ld/St datapath, strategically placed flushing is required to retain both performance and power failure recovery. The SNIA NVM Programming Model describes this type of functionality.
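The flush ordering described here can be illustrated with Python's standard `mmap` module, where `mmap.flush()` asks the OS to write modified pages of a memory-mapped file back to storage (`msync` on POSIX systems). This is a minimal sketch of a "write data, flush, then publish a valid flag, flush" pattern, not an implementation of the SNIA NVM Programming Model. The record layout (`PAYLOAD_SIZE`, `FLAG_OFFSET`) and the helper names are hypothetical, and on true persistent memory mapped for direct access, applications typically flush with processor cache-flush instructions rather than a system call.

```python
import mmap
import os
import tempfile

PAYLOAD_SIZE = 64            # hypothetical fixed-size record
FLAG_OFFSET = PAYLOAD_SIZE   # one "valid" byte stored right after the payload
FILE_SIZE = mmap.PAGESIZE    # map a single page


def create_file(path: str) -> None:
    """Create a zero-filled backing file large enough for the mapping."""
    with open(path, "wb") as f:
        f.write(b"\0" * FILE_SIZE)


def write_record(path: str, payload: bytes) -> None:
    """Store a payload with Ld/St-style writes, flushing before publishing it."""
    if len(payload) > PAYLOAD_SIZE:
        raise ValueError("payload too large")
    with open(path, "r+b") as f:
        with mmap.mmap(f.fileno(), FILE_SIZE, access=mmap.ACCESS_WRITE) as mm:
            mm[FLAG_OFFSET] = 0        # retract the old record first
            mm.flush()
            mm[0:PAYLOAD_SIZE] = payload.ljust(PAYLOAD_SIZE, b"\0")
            mm.flush()                 # payload durable before the flag
            mm[FLAG_OFFSET] = 1        # commit point
            mm.flush()


def read_record(path: str):
    """Return the payload if the valid flag is set, else None (recovery path)."""
    with open(path, "rb") as f:
        with mmap.mmap(f.fileno(), FILE_SIZE, access=mmap.ACCESS_READ) as mm:
            if mm[FLAG_OFFSET] != 1:
                return None
            # Assumes payloads never end in NUL bytes.
            return mm[0:PAYLOAD_SIZE].rstrip(b"\0")


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        path = os.path.join(d, "record.bin")
        create_file(path)
        print(read_record(path))   # None: nothing committed yet
        write_record(path, b"hello, PM")
        print(read_record(path))
```

Flushing only at these points, rather than after every store, keeps most writes at cache speed while guaranteeing that the valid flag never reaches storage ahead of the data it vouches for.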

Q. Once NVDIMM support becomes pervasive, alongside NVMe drives in the server box, should networked storage focus more on SAS flash or just SAS HDDs?

A. Not necessarily. NVMe over Fabrics, Fibre Channel, and iSCSI are also types of networked storage that will likely retain significant market share relative to SAS.

Q. Are ‘Big Data’ data warehouse applications starting to use persistent memory and persistence domain technologies?

A. It is too early to see much of this yet. PM technologies might become a priority as a staging area for analytic applications with high ingest or checkpoint rates. NVDIMMs are likely to be too expensive to store anything “big” for quite a while.

Q. Also, are persistent memory and persistence domains being used in hyper-converged and converged hardware infrastructures?

A. Persistent memory is quintessentially (Hyper-) converged. It wouldn’t be unreasonable to expect some traction with hyper-converged solutions that experience high storage-performance demand.

Q. What distance would you associate with tens of microseconds?

A. In terms of transmission delay, tens of µs correspond to campus or small-city scale, but the distance itself is often not the primary factor. Switching delays, transmission line properties, and software overhead are generally bigger factors.
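A quick back-of-the-envelope calculation shows why tens of microseconds maps to campus or small-city distances. This sketch assumes a signal propagation speed of about 2 × 10^8 m/s in optical fiber (roughly two-thirds of the speed of light) and deliberately ignores the switching and software delays that the answer identifies as usually dominant.

```python
FIBER_SPEED_M_PER_S = 2.0e8  # assumed propagation speed in fiber (~2/3 c)


def one_way_km(delay_us: float) -> float:
    """Distance a signal covers in delay_us microseconds."""
    return FIBER_SPEED_M_PER_S * delay_us / 1e6 / 1e3


def round_trip_km(delay_us: float) -> float:
    """Farthest endpoint reachable if delay_us must cover there and back."""
    return one_way_km(delay_us) / 2


if __name__ == "__main__":
    for us in (10, 50):
        print(f"{us} us: {one_way_km(us):.1f} km one way, "
              f"{round_trip_km(us):.1f} km round trip")
```

Under this assumption, 10 to 50 µs of pure propagation covers roughly 1 to 10 km, the campus-to-small-city range; every switch hop and software layer eats into that budget.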

Q. So latency would be the binding factor for distances…not a question, an observation.

A. Yes, in effect, either through transmission or relay. See above.

Q. Aren’t there multi-threaded SSDs?

A. Yes, but since the primary metric in this presentation is latency we ignore multi-threading. It can enable more work to get done, but it generally increases latency rather than reducing it.
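Why added concurrency tends to raise rather than lower latency can be seen with Little's law (requests in flight = throughput × mean latency). The sketch below uses a deliberately simplified closed-loop model of a device with a fixed per-request service latency and a hypothetical throughput ceiling (`max_iops`); real SSDs behave less cleanly.

```python
def closed_loop(queue_depth: int, service_latency_us: float, max_iops: float):
    """Return (iops, mean_latency_us) for a simplified closed-loop device model.

    Below saturation each request takes service_latency_us, so throughput grows
    with queue depth. Once throughput hits max_iops, extra requests only queue.
    """
    unsaturated_iops = queue_depth * 1e6 / service_latency_us
    iops = min(unsaturated_iops, max_iops)
    # Little's law: in-flight requests = throughput * latency.
    latency_us = queue_depth * 1e6 / iops
    return iops, latency_us


if __name__ == "__main__":
    for qd in (1, 8, 32):
        iops, lat = closed_loop(qd, service_latency_us=100, max_iops=100_000)
        print(f"queue depth {qd:>2}: {iops:>9,.0f} IOPS, {lat:6.1f} us")
```

Going from a queue depth of 8 to 32 in this model leaves throughput capped while mean latency more than triples, which is the trade-off the answer describes.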

Q. Is Pmalloc universal usage?

A. The term is starting to be recognized among developers and has been used in research. Various similar names have been used in early research prototypes, such as pmalloc in Mnemosyne and nvmalloc in SCMFS.

Q. So how would PM help with a (stock brokerage) requirement, where we currently envision an RDMA or iWARP solution?

A. With PM the answer is always lower latency. PM can be integrated like memory or like flash. RDMA network paths for both of these options were discussed in the presentation. In either case, PM has low enough latency that networking and software overheads will completely determine performance, even when using RDMA. The performance boost from PM is greatest when it is accessed locally. If remote access is a requirement, then the new work being done in the RDMA community should help.

Q. If data stored in memory must be copied to a different host's memory (for consistency), how does PM assist, or is there an extension to PM for this? Coherency between multiple hosts in a cluster, if you will?

A. PM technology does not help with this; the methods of managing consistency across hosts remain unchanged by PM. All PM offers is low latency persistence.

Coordination across hosts or nodes in a cluster must use existing clustering techniques such as locking and quorums. In addition, the relative timescales of memory access and network communication suggest the application of asynchronous remote replication techniques used in today’s storage solutions.

Regarding coherency, PM brings nothing new to the known techniques for managing coherency. Classical cluster architecture must be applied outside of symmetric multi-processing coherency domains. Within coherency domains, all of the logic is above the PM level in a processor side memory controller or a software emulation of the same algorithms.
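The asynchronous remote replication approach mentioned in this answer can be sketched as a toy Python model: writes are acknowledged as soon as they are persisted locally (where PM's low latency helps), and a separate step ships them to a replica later, so the replica may lag behind. The class and method names are hypothetical, and the model omits failure handling, cross-node ordering, and the locking or quorum protocols a real cluster would need.

```python
from collections import deque


class AsyncReplicatedLog:
    """Toy model: writes commit locally at once; replication happens later."""

    def __init__(self):
        self.local = []          # stands in for locally persisted records
        self.remote = []         # stands in for the remote replica
        self.pending = deque()   # records not yet shipped over the network

    def write(self, record) -> int:
        self.local.append(record)     # low-latency local persistence
        self.pending.append(record)   # queued for asynchronous replication
        return len(self.local) - 1    # acknowledged before a remote copy exists

    def replicate(self, max_records=None) -> int:
        """Ship up to max_records pending records (all if None); return count."""
        n = len(self.pending)
        if max_records is not None:
            n = min(n, max_records)
        for _ in range(n):
            self.remote.append(self.pending.popleft())
        return n

    def lag(self) -> int:
        """Number of acknowledged records the replica has not yet received."""
        return len(self.pending)


if __name__ == "__main__":
    log = AsyncReplicatedLog()
    for rec in ("a", "b", "c"):
        log.write(rec)
    log.replicate(max_records=2)
    print(log.remote, log.lag())
```

The gap reported by `lag()` is exactly the window that asynchronous replication trades for local write latency; closing it requires the clustering techniques described above, not anything PM provides.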