Why Object Storage is Important

It’s a Wrap! SNIA’s 20th Storage Developer Conference a Success!

Reviews are in for the 20th Storage Developer Conference (SDC) and they are thumbs up! The 2017 SDC was the largest ever, expanding to four full days with seven keynotes, five SNIA Tutorials, and 92 sessions. The SNIA Technical Council, which oversees conference content, compiled a rich agenda of 18 topic categories focused on …

SNIA Activities in Security, Containers, and File Storage on Tap at Three Bay Area Events

SNIA will be out and about in February in San Francisco and Santa Clara, CA, focused on its security, container, and file storage activities.

February 14-17, 2017, join SNIA in San Francisco at the RSA Conference in the OASIS Interop: KMIP & PKCS11 booth S2115. OASIS and SNIA member companies will be demonstrating the OASIS Key Management Interoperability Protocol (KMIP) through live interoperability across all participants. SNIA representatives will be on hand in the booth to answer questions about the Storage Security Industry Forum KMIP Conformance Test Program, which enables organizations with KMIP implementations to validate the protocol conformance of those products and meet market requirements for secure, plug-and-play storage solutions. In addition, Eric Hibbard, Chair of the SNIA Security Technical Work Group and CTO Security and Privacy, HDS Corporation, will participate in the “Can I Get a Witness? Technical Witness Bootcamp” session on February 17.

The following week, February 21-23, join SNIA at Container World in Santa Clara, CA. Enabling access to memory is an important concern to container designers, and Arthur Sainio, SNIA NVDIMM Special Interest Group Co-Chair from SMART Modular, will speak on Boosting Performance of Data Intensive Applications via Persistent Memory. Integrating containers into legacy solutions will be the focus of a panel where Mark Carlson, SNIA Technical Council Co-Chair from Toshiba, will speak on Container Adoption Paths into Legacy Infrastructure. SNIA experts will be joined by other leaders in the container ecosystem like Docker, Twitter, ADP, Google, and Expedia. The SNIA booth will feature cloud infrastructure and storage discussions and a demonstration of a multi-vendor persistent memory solution featuring NVDIMM! (P.S. – Are you new to containers? Get a head start on conference discussions by checking out a December 2016 SNIA blog on Containers, Docker, and Storage.)

Closing out February, find SNIA at its booth at USENIX FAST from February 27-March 2 in Santa Clara, CA, where you can engage with SNIA Technical Council leaders on the latest activities in file and storage technologies.

We look forward to seeing you at one (or more) of these events!

We’ve Been Thinking…What Does Hyperconverged Mean to Storage?

Here at the SNIA Ethernet Storage Forum (ESF), we’ve been discussing how hyperconverged adoption will impact storage. Converged Infrastructure (CI), Hyperconverged Infrastructure (HCI), along with Cluster or Cloud In a Box (CIB) are popular trend topics that have gained both industry and customer adoption. As part of data infrastructures, CI, HCI, and CIB enable simplified deployment of resources (servers, storage, I/O networking, hypervisor, application software) across different environments.

But what do these approaches mean for the storage environment? What are the key concerns and considerations related specifically to storage? How will the storage be connected to (or included in) the platform? Who will protect and back up the data? And most importantly, how do you know that you’re asking the right questions in order to get to the right answers?

Find out on March 15th in a live SNIA-ESF webcast, “What Does Hyperconverged Mean to Storage.” We’ve invited expert Greg Schulz, founder and analyst of Server StorageIO, to answer the questions we’ve been debating. Join us, as Greg will move beyond the hype (pun intended) to discuss:

- What are the storage considerations for CI, CIB and HCI

- Why fast applications and fast servers need fast I/O

- Networking and server-storage I/O considerations

- How to avoid aggravation-causing aggregation (bottlenecks)

- Aggregated vs. disaggregated vs. hybrid converged

- Planning, comparing, benchmarking and decision-making

- Data protection, management and east-west I/O traffic

- Application and server north-south I/O traffic

Register today and please bring your questions. We’ll be on-hand to answer them during this event. We hope to see you there!

Buffers, Queues, and Caches, Oh My!

Buffers and queues are part of every data center architecture, and a critical part of performance, both in improving it and in hindering it. A well-implemented buffer can mean the difference between a finely run system and a confusing nightmare of troubleshooting. Knowing how buffers and queues work in storage can help make your storage system shine.

However, there is something of a mystique surrounding these different data center components, as many people don’t realize just how they’re used and why. Join our team of carefully-selected experts on February 14th in the next live webcast in our “Too Proud to Ask” series, “Everything You Wanted to Know About Storage But Were Too Proud To Ask – Part Teal: The Buffering Pod” where we’ll demystify this very important aspect of data center storage. You’ll learn:

- What are buffers, caches, and queues, and why should you care about the differences?

- What’s the difference between a read cache and a write cache?

- What does “queue depth” mean?

- What are a buffer, a ring buffer, and a host memory buffer, and why do they matter?

- What happens when things go wrong?

These are just some of the topics we’ll be covering, and while it won’t be an exhaustive look at buffers, caches, and queues, you can be sure that you’ll get insight into this very important, and yet often overlooked, part of storage design.

Register today and spend Valentine’s Day with our experts who will be on-hand to answer your questions on the spot!

SNIA Puts the You in YouTube

Did you know that SNIA has a YouTube Channel? SNIAVideo is the place designed for You to visit for the latest technical and educational content – all free to download – from SNIA thought leaders and events.

Our latest videos cover a wide range of topics discussed at last month’s SNIA Storage Developer Conference. Enjoy The Ride Cast video playlist where industry expert Marc Farley (@GoFarley) motors around Silicon Valley with SNIA member volunteers Richelle Ahlvers (@rahlvers), Stephen Bates (@stepbates), and Mark Carlson (@macsun), and storage and solid state technology analysts Tom Coughlin (@ThomasaCoughlin) and Jim Handy, chatting about persistent memory, SNIA Swordfish, NVMe, storage end users, and more. You’ll also want to check out onsite interviews from Kinetic open storage project participants Seagate, Scality, and Open vStorage on their experiences at an SDC solutions plugfest.

Featured on the SNIAVideo YouTube Channel are SNIA thought leaders weighing in on the trends and activities that will revolutionize enterprise data centers and consumer applications over the next decade. New ways to unify the management of storage and servers in hyperscale and cloud environments; an ecosystem driving system memory and storage into a single, unified “persistent memory” entity; and how security is being managed at enterprises today are just a few of the topics covered by speakers from Microsoft, Intel, Toshiba, Cryptsoft, and more.

Bookmark our site and return often for fresh, new content on how SNIA helps You understand and solve the thorny storage issues facing your career and your organization.

SNIA Storage Developer Conference – The Knowledge Continues

SNIA’s 18th Storage Developer Conference is officially a success, with 124 general and breakout sessions; Cloud Interoperability, Kinetic Storage, and SMB3 plugfests; ten Birds-of-a-Feather sessions; and amazing networking among 450+ attendees. Sessions on NVMe over Fabrics won the title of most attended, but Persistent Memory, Object Storage, and Performance were right behind. Many thanks to SDC 2016 Sponsors, who engaged attendees in exciting technology discussions.

For those not familiar with SDC, this technical industry event is designed for a variety of storage technologists at various levels from developers to architects to product managers and more. And, true to SNIA’s commitment to educating the industry on current and future disruptive technologies, SDC content is now available to all – whether you attended or not – for download and viewing.

You’ll want to stream keynotes from Citigroup, Toshiba, DSSD, Los Alamos National Labs, Broadcom, Microsemi, and Intel – they’re available now on demand on SNIA’s YouTube channel, SNIAVideo.

All SDC presentations are now available for download, and over the next few months you can continue to download SDC podcasts, which combine audio and slides. The first podcast from SDC 2016 – on hyperscaler – is available here, along with all 2015 SDC Podcasts, and more will be available in the coming weeks.

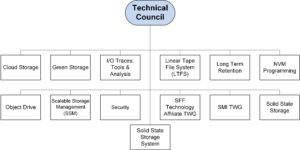

SNIA thanks all its members and colleagues who contributed to making SDC a success! A special thanks goes out to the SNIA Technical Council, a select group of acknowledged industry experts who work to guide SNIA technical efforts. In addition to driving the agenda and content for SDC, the Technical Council oversees and manages SNIA Technical Work Groups, reviews architectures submitted by Work Groups, and serves as SNIA’s technical liaison to standards organizations. Learn more about these visionary leaders at http://www.snia.org/about/organization/tech_council.

And finally, don’t forget to mark your calendars now for SDC 2017 – September 11-14, 2017, again at the Hyatt Regency Santa Clara. Watch for the Call for Presentations to open in February 2017.

The Changing World of SNIA Technical Work – A Conversation with Technical Council Chair Mark Carlson

Mark Carlson is the current Chair of the SNIA Technical Council (TC). Mark has been a SNIA member and volunteer for over 18 years, and also wears many other SNIA hats. Recently, SNIA on Storage sat down with Mark to discuss his first nine months as the TC Chair and his views on the industry.

SNIA on Storage (SoS): Within SNIA, what is the most important activity of the SNIA Technical Council?

Mark Carlson (MC): The SNIA Technical Council works to coordinate and approve the technical work going on within SNIA. This includes both SNIA Architecture (standards) and SNIA Software. The work is conducted within 13 SNIA Technical Work Groups (TWGs). The members of the TC are elected from the voting companies of SNIA, and the Council also includes appointed members and advisors as well as SNIA regional affiliate advisors.

SoS: What has been your focus these first nine months of 2016?

MC: The SNIA Technical Council has overseen a major effort to integrate a new standards organization into SNIA. The creation of the new SNIA SFF Technology Affiliate (TA) Technical Work Group has brought in a very successful group of folks and standards related to storage connectors and transceivers. This work group, formed in June 2016, carries forward the longstanding work of the SFF Committee, which operated from 1990 until mid-2016. In 2016, SFF Committee leaders transitioned organizational stewardship to SNIA, to operate under a special membership class named Technology Affiliate, while retaining the longstanding technical focus on specifications, in the same fashion as all SNIA TWGs.

SoS: What changes did SNIA implement to form the new Technology Affiliate membership class and why?

MC: The SNIA Policy and Procedures were changed to account for this new type of membership. Companies can now join an Affiliate TWG without having to join SNIA as a US member. Current SNIA members who want to participate in a Technology Affiliate like SFF can join a Technology Affiliate and pay the separate dues. The SFF was a catalyst – we saw an organization looking for a new home as its membership evolved and its leadership transitioned. They felt SNIA could be this home but we needed to complete some activities to make it easier for them to seamlessly continue their work. The SFF is now fully active within SNIA and also working closely with T10 and T11, groups that SNIA members have long participated in.

SoS: Is forming this Technology Affiliate a one-time activity?

MC: Definitely not. SNIA is actively seeking organizations that are looking for the structure SNIA provides: IP policies, an established infrastructure in which to conduct their work, and 160+ leading companies with volunteers who know storage and networking technology.

SoS: What are some of the customer pain points you see in the industry?

MC: Critical pain points the TC has started to address with new TWGs over the last 24 months include: performance of solid state storage arrays, where the SNIA Solid State Storage Systems (S4) TWG is working to identify, develop, and coordinate system performance standards for solid state storage systems; and object drives, where work is being done by the Object Drive TWG to identify, develop, and coordinate standards for object drives operating as storage nodes in scale out storage solutions. With the number of different future disk drive interfaces emerging that add value from external storage to in-storage compute, we want to make sure they can be managed at scale and are interoperable.

SoS: What’s upcoming for the next six months?

MC: The TC is currently working on a white paper to address data center drive requirements and the features and existing interface standards that satisfy some of those requirements. Of course, not all the solutions to these requirements will come from SNIA, but we think SNIA is in a unique position to bring in the data center customers that need these new features and work with the drive vendors to prototype solutions that then make their way into other standards efforts. Features that are targeted at the NVM Express, T10, and T13 committees would be coordinated with these customers.

SoS: Can non-members get involved with SNIA?

MC: Until very recently, if a company wanted to contribute to a software project within SNIA, they had to become a member. This was limiting to the community, and cut off contributions from those who were using the code, so SNIA has developed a convenient Contributor License Agreement (CLA) for contributions to individual projects. This allows external contributions but does not change the software licensing. The CLA is compatible with the IP protections that the SNIA IP Policy provides to our members. Our hope is that this will create a broader community of contributors to a more open SNIA, and facilitate open source project development even more.

SoS: Will you be onsite for the upcoming SNIA Storage Developer Conference (SDC)?

MC: Absolutely! I look forward to meeting SNIA members and colleagues September 19-22 at the Hyatt Regency Santa Clara. We have a great agenda, now online, that the TC has developed for this, our 18th conference, and registration is now open. SDC brings in more than 400 of the leading storage software and hardware developers, storage product and solution architects, product managers, storage product quality assurance engineers, product line CTOs, storage product customer support engineers, and in-house IT development staff from around the world. If technical professionals are not familiar with the education and knowledge that SDC can provide, a great way to get a taste is to check out the SDC Podcasts now posted, and the new ones that will appear leading up to SDC 2016.

Podcasts Bring the Sounds of SNIA’s Storage Developer Conference to Your Car, Boat, Train, or Plane!

SNIA’s Storage Developer Conference (SDC) offers exactly what a developer of cloud, solid state, security, analytics, or big data applications is looking for – rich technical content delivered without vendor bias by today’s leading technologists. The 2016 SDC agenda is being compiled, but now you can get a “sound bite” of what to expect by downloading SDC podcasts via iTunes, or visiting the SDC Podcast site at http://www.snia.org/podcasts to download the accompanying slides and/or listen to the MP3 version.

Each podcast has been selected by the SNIA Technical Council from the 2015 SDC event. Topics include:

- Preparing Applications for Persistent Memory from Hewlett Packard Enterprise

- Managing the Next Generation Memory Subsystem from Intel Corporation

- NVDIMM Cookbook – a Soup to Nuts Primer on Using NVDIMMs to Improve Your Storage Performance from AgigA Tech and Smart Modular Systems

- Standardizing Storage Intelligence and the Performance and Endurance Enhancements It Provides from Samsung Corporation

- Object Drives, a New Architectural Partitioning from Toshiba Corporation

- Shingled Magnetic Recording – the Next Generation of Storage Technology from HGST, a Western Digital Company

- SMB 3.1.1 Update from Microsoft

Eight podcasts are now available, with new ones added each week all the way up to SDC 2016 which begins September 19 at the Hyatt Regency Santa Clara. Keep checking the SDC Podcast website, and remember that registration is now open for the 2016 event at http://www.snia.org/events/storage-developer/registration. The SDC conference agenda will be up soon at the home page of http://www.storagedeveloper.org.

Enjoy these great technical sessions, no matter where you may be!